by Thomas Talafuse, Lance Champagne, Erika Gilts

As presented at the 2019 Winter Simulation Conference

Abstract

Increased use of unmanned aerial vehicles (UAV) by the United States Air Force (USAF) has put a strain on flying training units responsible for producing aircrew. An increase in student quotas, coupled with new training requirements stemming from a transition to the MQ-9 airframe, impacts the resources needed to meet the desired level of student throughput. This research uses historical data of a UAV flying training unit to develop a simulation model of daily operations within a training squadron. Current requirements, operations, and instructor manning levels are used to provide a baseline assessment of the relationship between unit manning and aircrew production. Subsequent analysis investigates the effects of course frequency, class size, and quantity of instructors on student throughput. Results from this research recommend novel approaches in course execution to more fully utilize instructor capacity and inform UAV flying training units on appropriate manning levels required to meet USAF needs.

Introduction

The 9th Attack Squadron (9 ATKS), located at Holloman Air Force Base, New Mexico, is responsible for training aircrew for the MQ-9 Reaper, a remotely piloted aircraft operated by two crews with differing roles. A pilot and sensor operator serve as the launch and recovery crew that performs takeoffs and landings, and an in-flight crew, consisting of a sensor operator and pilot, conducts operations in flight. The MQ-9 serves a multitude of operational roles ranging from air interdiction to combat search and rescue assistance. The 9 ATKS implements five different training courses to prepare operators for all aspects of MQ-9 operations.

Manning levels have challenged the ability of the 9 ATKS to provide adequate support to maintain flight proficiency for the permanent party aircrew, support required administrative tasks, and provide appropriate crew rest, while simultaneously satisfying the demands of the current number of students in training. With an anticipated influx of additional students for the Initial Qualification Training (IQT) syllabus in the coming years, the 9 ATKS wished to determine the number of instructor pilots needed to maintain coursework flow to ensure students graduate according to planning timelines. In addition to manning concerns, many other factors of a stochastic nature affect the ability to meet training requirements, including weather conditions, permanent party deployments, temporary duty assignments (TDY), maintenance, flying status, and leave.

This research effort sought to determine the number of operators the 9 ATKS can train annually through optimizing current manning, as well as determine the number of instructors required for the anticipated increase in students. Utilizing modeling and simulation, a comprehensive manning analysis sought to determine if current manning allowed student production needs to be met without over-tasking instructors, to identify the aspects of training creating delays, and to project the number of instructors needed to meet future changes in Air Force demands.

Background and Relevant Literature

Background

There are multiple courses involved in MQ-9 training. The largest is the Basic Initial Qualification Course, also referred to as the Requalification/Transition Track 1 (IQT). IQT trains pilots and sensor operators new to the MQ-9, and consists of 93 training days, with 61 academic days and 32 flight days. The Requalification/Transition Track 2 (TX-2) is mandatory training for pilots and sensor operators that have been unqualified on the MQ-9 for more than 39 months and consists of 71 training days, with 49 academic days and 22 flight days. The Requalification/Transition Track 3 (TX-3) is required for pilots and sensor operators unqualified for less than 39 months, and consists of 43 academic days and 16 flight days for a total of 59 training days. Transition Track 4 (TX-4) trains qualified pilots or sensor operators to become qualified in the MQ-9 major weapons system. The TX-4 track is 44 training days with 28 academic days and 16 flight days. The final training track offered by the 9 ATKS is the Formal Training Unit (FTU) Instructor Upgrade Training (FIUT) course, used to upgrade crew members to instructors. The course runs for 22 days, consisting of seven academic days and 15 flying days. The 9 ATKS runs all five of the training tracks concurrently and year-round, excluding holidays and weekends.

As seen in the manning levels in Table 1, there are various classes of personnel within the 9 ATKS. The number of flying events each pilot is able to instruct per week is determined by their position and availability. If a pilot does not have additional squadron requirements, they are able to instruct five flying events per week while maintaining appropriate crew rest. In addition to the skill sets associated with each class of personnel, the skill sets amongst pilots are varied, limiting what portion of each course an individual is qualified to instruct.

Table 1: 9 ATKS manning.

9 ATKS pilots are also responsible for daily manning of an operations supervisor, the supervisor of flight, and a launch and recovery crew. These additional positions require particular skill sets that limit the number of pilots that are able to serve in each position. Although the pilots are frequently flying as instructors, they also must maintain currency on the MQ-9 by flying once a month as the primary pilot. Similarly, sensor operators are limited on what they are able to instruct based on their position and availability.

Basic crew equivalence (BCE) is a measure used by the 9 ATKS to capture annual crew production. The 9 ATKS is expected to produce at least 120 BCE per year under normal operations. The BCE factor is weighted by syllabus type, with the IQT syllabus being the most heavily weighted. The BCE weight for crew completion, by syllabus, is seen in Table 2.
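Because BCE is a weighted count of completions, annual BCE can be sketched as the sum over syllabi of completions times syllabus weight. This is a minimal sketch under that assumption; the weights below are hypothetical placeholders (only the ordering, with IQT weighted most heavily, follows the text), not the Table 2 values.

```python
# Hypothetical placeholder weights: the real values are in Table 2 and are NOT
# reproduced here. Only the ordering (IQT weighted most heavily) follows the text.
HYPOTHETICAL_BCE_WEIGHTS = {"IQT": 1.0, "TX-2": 0.8, "TX-3": 0.6, "TX-4": 0.5, "FIUT": 0.3}


def annual_bce(completions, weights):
    """Weighted crew production: sum over syllabi of completions x syllabus weight."""
    return sum(count * weights[syllabus] for syllabus, count in completions.items())


def meets_bce_goal(completions, weights, goal=120.0):
    """True if weighted production reaches the goal (120 BCE under normal operations)."""
    return annual_bce(completions, weights) >= goal


# Usage with made-up completion counts.
example = {"IQT": 100, "FIUT": 20}
print(annual_bce(example, HYPOTHETICAL_BCE_WEIGHTS))
```

Passing `goal=140.0` gives the corresponding check for the surge requirement.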

Table 2: BCE weights.

Relevant Literature

Historical work to address manning and scheduling has used linear (Winston 2004) and integer programming (Billionnet 1999), and these methods have been applied to real-world scheduling problems (Ryan and Foster 1981; Ghalwash et al. 2016). While these methods have been used successfully, the complex, dynamic, and stochastic nature of this problem would have required many unacceptable assumptions under these approaches.

Simulation has been widely used for scheduling optimization, allowing factors to be represented with distributions that more closely resemble the real-world problem, as opposed to constant values. Banks et al. (2010) highlight that a system model can often be developed and solved with mathematical methods, but the complexity of the real-world system is not captured as well as it can be with a computer-based simulation. Simulating a system allows for study and analysis of aspects that can change over time, with multiple runs providing insight into the expected outcomes and their distributions. Once a simulation model is constructed, the user can alter multiple factors and predict system performance with those changes. Analysis of the output of a simulation based on changes in the input provides valuable information on the impact of the differing variables. The insights gained from a simulation can help identify areas that can be improved in the real-world system.

A thorough review of the literature identified simulation research techniques applicable to the situation faced by the 9 ATKS. Sepulveda et al. (1999) used simulation to improve processes in cancer treatment centers, where different types of patients visited multiple locations for treatment of their conditions by hospital staff with varying skill sets, leading to a 30% increase in patients serviced without changing staffing levels. Kumar and Kapur (1989) simulated emergency room scheduling to evaluate the effect of staffing level by shift length on the quality of service, while minimizing patient wait time and costs. Similar to the 9 ATKS instructing concurrently running courses, the hospital staff was responsible for providing service across five different levels of emergency, while constrained by their shift lengths. Seguin and Hunter (2013) used a resource allocation planning tool to simulate the training operations for the Canadian Forces Flying Training School to analyze the training program, identify causes of delay, and minimize course completion time. The simulation accounted for weather, aircraft, simulators, different instructor types, as well as student performance. Simulation identified flight simulator availability as the biggest contributor to delays and identified a block scheduling format as the best alternative to expedite course completion.

Jun et al. (1999) illustrate the benefits of simultaneously using simulation and optimization techniques for a multi-criteria objective function. April et al. (2003) state that nearly all modern simulation software packages provide a search for optimal values of input parameters, as opposed to a statistical estimation, and use evolutionary approaches to search the solution space and evolve a population of solutions. The OptQuest optimization software used by Simio utilizes this evolutionary approach, assessing the inputs and outputs of the simulation, combined with a user-provided starting point, precision level, stopping criteria, and objective function, to explore candidate values (April et al. 2003). Kleijnen and Wan (2007) utilized OptQuest to minimize inventory cost for an inventory management system, concluding that OptQuest provided an output that was the best estimate of the true optimum when compared to a modified response surface methodology.

With the complexity of the flight training scheduling for the MQ-9 community, a combination of simulation and optimization was used via Simio and its OptQuest feature. The software suite provided a capability to run experiments, generate multiple types of output data, and allow intuitive analyses for assessing optimal scheduling options for the 9 ATKS scheduling problem (Prochaska and Thiesing 2008).

Methodology

Model Formulation

To best address the research objectives and represent real-world operations of the 9 ATKS, a simulation was built using Simio object-oriented simulation software. Other approaches were considered, but a simulation-based approach was deemed most appropriate to understand current manning levels, impact on throughput, and forecast optimal manning for future uncertainties. The model represents key aspects of the day-to-day operations of the 9 ATKS. Each assigned, attached, reservist, or contractor instructor is represented in the simulation as a resource. The number of pilots and sensor operators associated with the 9 ATKS in October of 2018 was used as the representative manning. No two instructors in the 9 ATKS hold the same skill set or job requirements.

With a total of 132 instructors, modeling individualized schedules was impractical. Based upon historical means, an average weekly flying-event capacity was applied to each instructor type, allowing assigned, attached, reserve, and contractor instructors to complete five, two, four, and five events per week, respectively. Traditional reserve pilots flying one event per month were removed from the model altogether.
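The per-type weekly rates turn the instructor pool into a simple aggregate capacity. The sketch below assumes capacity is just headcount times the weekly rate for each type, before any unavailability. Only the 33 contractors (15 pilots and 18 sensor operators) come from the text; the other headcounts are hypothetical and chosen so the total is 132.

```python
# Average weekly flying events per instructor category (historical means from the text).
EVENTS_PER_WEEK = {"assigned": 5, "attached": 2, "reserve": 4, "contractor": 5}


def weekly_capacity(headcount):
    """Total instructor flying events available per week, before unavailability."""
    return sum(EVENTS_PER_WEEK[category] * n for category, n in headcount.items())


# Hypothetical split of the 132 instructors: only the 33 contractors (15 pilots +
# 18 sensor operators) come from the text; the other counts are made up.
hypothetical_mix = {"assigned": 60, "attached": 20, "reserve": 19, "contractor": 33}
print(weekly_capacity(hypothetical_mix))
```

This figure is an upper bound. Daily unavailability, additional duties, and skill-set restrictions all reduce the events that can actually be instructed.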

Under normal operations, pre-brief, post-brief, administrative, and crew rest requirements limit instructors to one flying event per day. Thus, an instructor is seized for the entire eight-hour day for one flying event. Instructors can also be seized for non-flying specialty assignments: an entity arrives each day and seizes the appropriate instructor resources needed for additional duties. Additionally, historical data showed that 12% of instructors are unavailable on any given day due to TDY, leave, illness, and other military duties. This is captured by an additional entity that randomly seizes 16 instructors (12% of 132) daily.
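The daily availability logic can be sketched as follows. A fixed count of instructors (12% of the pool, rounded) is drawn at random as unavailable, and those seized for additional duties are excluded. Every remaining instructor can take at most one flying event that day. The paper does not say whether the random draw may overlap additional-duty assignments, so drawing only from instructors not on duty is an assumption here.

```python
import random
from typing import Optional


def daily_unavailable_count(total_instructors, rate=0.12):
    """Instructors removed each day; 12% of 132 = 15.84, which rounds to 16."""
    return round(total_instructors * rate)


def instructors_free_to_fly(instructors, additional_duty, rate=0.12,
                            rng: Optional[random.Random] = None):
    """Return instructors who can each take at most one flying event today.

    Assumption: the random unavailability draw is taken only from instructors
    not already seized for additional duties, so the two never overlap.
    """
    rng = rng or random.Random()
    k = daily_unavailable_count(len(instructors), rate)
    not_on_duty = [i for i in instructors if i not in additional_duty]
    unavailable = set(rng.sample(not_on_duty, k))
    return [i for i in not_on_duty if i not in unavailable]


names = [f"I{n}" for n in range(132)]          # hypothetical instructor IDs
free = instructors_free_to_fly(names, {"I0", "I1", "I2"}, rng=random.Random(7))
print(len(free))  # 132 - 3 on duty - 16 unavailable
```

Because each free instructor is seized for the whole day, the length of this list is also the maximum number of instructor-led flying events that day.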

Instructors possess combinations of 14 different skill sets that determine what flights or academic courses they can instruct. Model selection of instructors reflects the 9 ATKS sourcing prioritization, captured by the resource list order.
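Sourcing by resource-list order amounts to a first-match search: walk the list in priority order and take the first idle instructor who holds the required skill. The sketch below illustrates that rule. The instructor names are hypothetical, and "LR" and "SIM" stand in for two of the 14 skill sets. It does not reproduce Simio's resource-list API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Instructor:
    name: str
    skills: frozenset


def select_instructor(priority_list, required_skill, busy) -> Optional[Instructor]:
    """Return the first idle instructor, in sourcing-priority order, holding the skill."""
    for instructor in priority_list:
        if instructor.name not in busy and required_skill in instructor.skills:
            return instructor
    return None


# Hypothetical resource list, ordered by sourcing priority.
priority = [
    Instructor("A", frozenset({"SIM"})),
    Instructor("B", frozenset({"SIM", "LR"})),
    Instructor("C", frozenset({"LR"})),
]
print(select_instructor(priority, "LR", busy={"B"}).name)
```

Placing multi-skilled instructors later in the list keeps them free for events that only they can cover. This is one plausible reason for a squadron-specific ordering.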

Both MQ-9 aircraft and simulators are used for training purposes, with four of each on hand for daily use. The model treats these as resources, seized by students as they commence each training task. A typical day consists of each aircraft completing four flights and each simulator five flights, and these serve as the daily capacity of each equipment resource. Weather conditions and aircraft maintenance limit aircraft availability, while simulator availability is limited by maintenance downtime and software updates. The historical average aircraft operational time lost due to weather and maintenance is 23.6% and 3.5%, respectively. Simulators are not affected by weather, but are down 0.3% of the time for maintenance and software updates.
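Daily equipment capacity and the all-aircraft weather draw can be sketched as below. One random draw per day grounds every aircraft together, while simulators are unaffected. Treating the 23.6% historical time lost as the per-day probability of a no-fly day is an assumption, and maintenance is left out of this fragment.

```python
import random

AIRCRAFT, SIMULATORS = 4, 4
FLIGHTS_PER_AIRCRAFT, FLIGHTS_PER_SIMULATOR = 4, 5
WEATHER_NO_FLY_PROB = 0.236  # assumption: 23.6% time lost treated as P(no-fly day)


def daily_flight_capacity(rng):
    """Return (aircraft flights, simulator flights) available today.

    One weather draw grounds every aircraft at once; simulators are unaffected.
    Maintenance downtime is not included here.
    """
    simulator_flights = SIMULATORS * FLIGHTS_PER_SIMULATOR
    if rng.random() < WEATHER_NO_FLY_PROB:
        return 0, simulator_flights
    return AIRCRAFT * FLIGHTS_PER_AIRCRAFT, simulator_flights


rng = random.Random(2019)
days = [daily_flight_capacity(rng) for _ in range(20_000)]
# Long-run mean should approach 16 * (1 - 0.236) = 12.224 aircraft flights per day.
mean_aircraft = sum(a for a, _ in days) / len(days)
```

Modeling weather as whole grounded days, rather than thinning each flight independently, preserves the bursty loss of aircraft events that students experience.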

Weather effects are modeled by an entity seizing all aircraft on days when weather prevents flight, determined by a random draw. Maintenance is modeled as an individual reliability factor attached to each equipment resource. Failure is based on a usage count, and the count between failures follows a uniform distribution for both aircraft and simulators. Downtime was modeled as a full day, making equipment inoperable for four flights (aircraft) or five flights (simulators). To represent the 3.5% aircraft downtime as four flights, mean uptime per aircraft was set at 114 flights, modeled as a uniform distribution with a minimum of 104 and a maximum of 124. The 0.3% simulator downtime was modeled as a uniform distribution with a minimum of 1,400 and a maximum of 1,800 flights between failures, each failure removing five flights.
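The uptime parameters follow from requiring the lost flights per failure, divided by the mean flights between failures, to equal the target downtime fraction: 4 / 0.035 ≈ 114 for aircraft. The usage-count failure model can be sketched as below. Drawing an integer count from the inclusive range with `randint` is an assumption; Simio's uniform may be continuous.

```python
import random


def mean_flights_between_failures(downtime_flights, downtime_fraction):
    """Uptime u such that downtime_flights / u equals the target fraction."""
    return downtime_flights / downtime_fraction


class UsageCountReliability:
    """Fail after a Uniform{lo..hi} number of flights; each failure costs one day."""

    def __init__(self, lo, hi, rng):
        self.lo, self.hi, self.rng = lo, hi, rng
        self.remaining = rng.randint(lo, hi)  # flights left until the next failure

    def record_flight(self):
        """Count one completed flight; return True when the equipment fails."""
        self.remaining -= 1
        if self.remaining == 0:
            self.remaining = self.rng.randint(self.lo, self.hi)
            return True
        return False


print(round(mean_flights_between_failures(4, 0.035)))  # aircraft: 4 / 0.035 ≈ 114.3
print(round(mean_flights_between_failures(5, 0.003)))  # simulator: 5 / 0.003 ≈ 1666.7
```

For simulators, the chosen range of 1,400 to 1,800 has mean 1,600, so 5 / 1,600 ≈ 0.31%, which is close to the 0.3% target.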

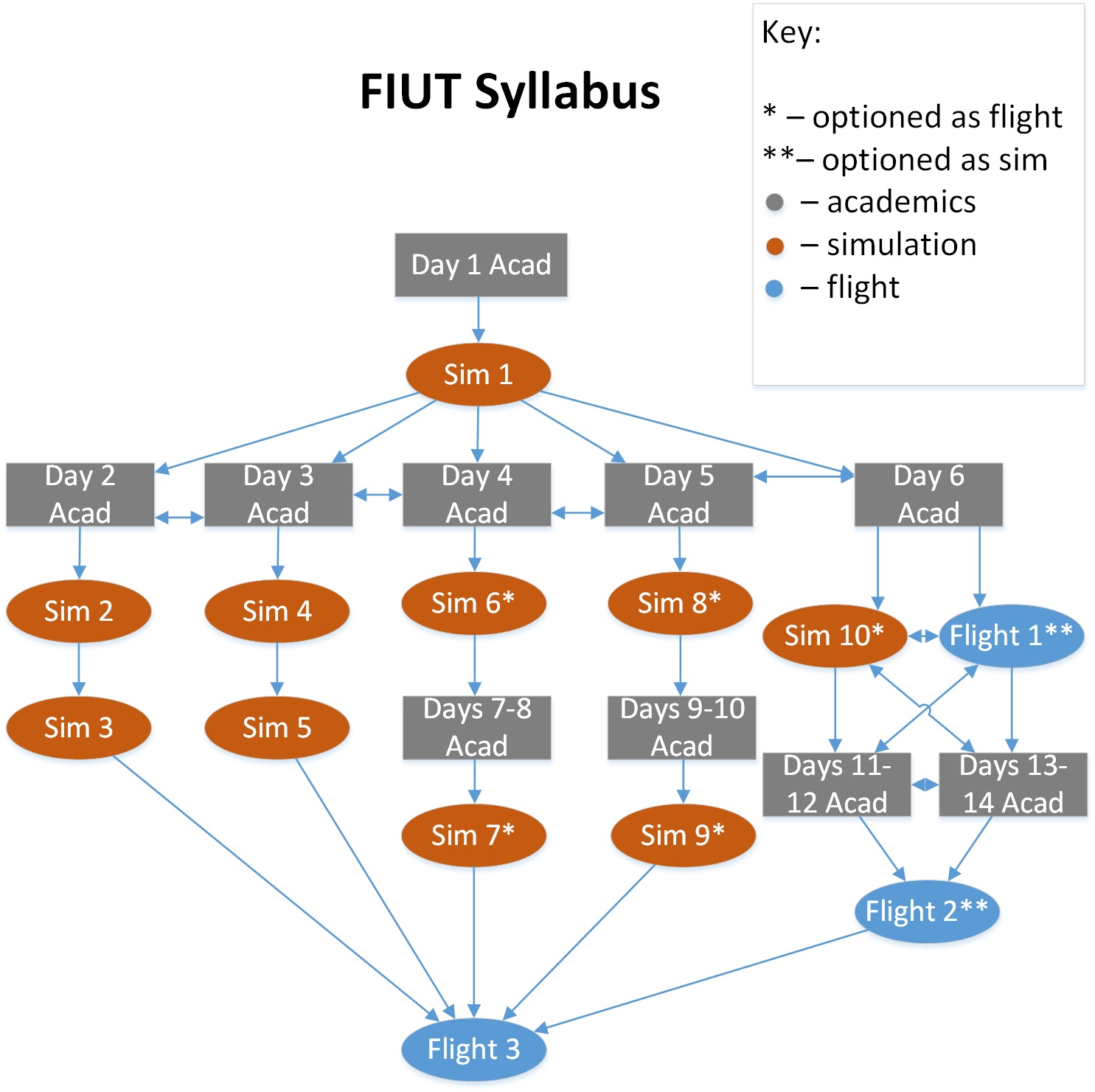

The syllabi for training programs break down events with the time (hours) required for each event. Events consist of academic courses, simulation flights, and flights in the aircraft, and documentation lists events to be completed on each training day (Department of the Air Force 2018). The five different courses are represented as servers that process entities. Within each server is a task table listing all events and the necessary progression for that syllabus, based upon the MQ-9 Training Manual, as illustrated in Figure 1. Each task table representing a syllabus contains the different aspects for each training event, including task name, processing time, predecessor tasks, probability of student failure, pilot resource requirement, sensor operator resource requirement, equipment requirement, starting task process, and a finish task process. Probabilities of student failure for each event are based upon historical data. All task processing times are set to eight hours, as they normally take an entire day to complete.

Some tasks can be completed in differing order, dependent on their prerequisite requirements. The predecessor component allows the students to complete the tasks in a variety of patterns, dependent on instructor and equipment availability. The pilot, sensor operator, and equipment requirements contain the list of applicable instructors or equipment (aircraft or simulator) for that particular event. For some events, only an in-class instructor is required, and a dummy resource is used as a filler for the other instructor requirement. Also, some academic days are strictly computer-based and are not reliant on any resources.
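A task-table row and the predecessor check can be sketched as a small data structure. The fields mirror the columns listed above, but the field names are illustrative assumptions rather than Simio task-table properties. `None` marks where the model would use a dummy resource or no resource at all.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Task:
    """One row of a syllabus task table (field names are illustrative)."""
    name: str
    predecessors: tuple = ()
    fail_prob: float = 0.0
    hours: float = 8.0                   # every task occupies a full day
    pilot_pool: Optional[str] = None     # None: dummy resource or no instructor
    sensor_pool: Optional[str] = None
    equipment: Optional[str] = None      # "aircraft", "simulator", or None
    start_mode: str = "individual"       # "individual", "super_sortie", or "class"


def eligible_tasks(table, completed, in_progress=frozenset()):
    """Tasks a student may start: not done, not running, all predecessors done."""
    return [
        t for t in table
        if t.name not in completed
        and t.name not in in_progress
        and all(p in completed for p in t.predecessors)
    ]


# Hypothetical four-task fragment: B and C can be done in either order after A.
table = [Task("A"), Task("B", ("A",)), Task("C", ("A",)), Task("D", ("B", "C"))]
print([t.name for t in eligible_tasks(table, completed={"A"})])
```

Returning every eligible task, rather than a fixed next step, lets the order adapt to whichever instructors and equipment happen to be free.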

Figure 1: FIUT course flow.

The starting task for each training event is either an individual process completed by each student, an event completed as a set of two students (super-sortie), or an academic course that is completed as an entire class of students. The individual process checks that the resources are available for that student, and that the student is not currently completing other tasks. If both those criteria are met, the student seizes the appropriate resources for the duration of the task. For the super-sorties and academic class tasks, the start task process has student entities wait until the appropriate number of students are available. After the processing time has passed in the simulation, the finish task process is implemented. The finish task process is similar for all events; the student releases the resources seized for the task.
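The three start modes differ only in how many students must be waiting before a task can begin: one for an individual event, two for a super-sortie, and the whole class for an academic course. The sketch below illustrates that batching rule. It assumes `waiting` holds only students from the same class who are ready for the task.

```python
from collections import deque

GROUP_SIZE = {"individual": 1, "super_sortie": 2}


def start_group(waiting, mode, class_size):
    """Pop and return the students who start together, or [] if still waiting.

    `waiting` is the queue of students (from one class) ready for this task.
    """
    need = class_size if mode == "class" else GROUP_SIZE[mode]
    if len(waiting) < need:
        return []
    return [waiting.popleft() for _ in range(need)]


q = deque(["s1"])
print(start_group(q, "super_sortie", class_size=10))  # one student is not enough
```

Under this rule, a single student still repeating an earlier event holds the entire class at the next academic task. This is one mechanism consistent with academic courses accounting for most of the waiting reported in the analysis.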

A random number draw is used to determine if a student failed the event. If the student fails, they have not met the predecessor requirement for the next task and will repeat the task until passed.
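If each attempt is an independent draw with the same failure probability p, the number of attempts is geometric with mean 1 / (1 - p). Because each task takes a full day, a task adds p / (1 - p) days on average. The sketch below assumes this independence across repeats.

```python
import random


def attempts_until_pass(fail_prob, rng):
    """Repeat the event, one day per attempt, until a uniform draw passes."""
    attempts = 1
    while rng.random() < fail_prob:
        attempts += 1
    return attempts


def expected_attempts(fail_prob):
    """Mean attempts under independent draws (geometric distribution): 1 / (1 - p)."""
    return 1.0 / (1.0 - fail_prob)


rng = random.Random(3)
sims = [attempts_until_pass(0.2, rng) for _ in range(50_000)]
```

For a hypothetical event with a 20% failure rate, the expected number of attempts is 1.25, or a quarter-day of delay on average. The delay is larger when the repeat also has to wait for the rest of the student's class.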

Entities represent a crew, comprised of a student pilot and student sensor operator, flowing through the events in each course. Student arrivals were determined by the historical average number of classes and size. For IQT, students arrive every 41 days, every 365 days for the TX-2 course, every 219 days for the TX-3 course, every 183 days for the TX-4 course, and every 37 days for the FIUT track. The historical average class size for the IQT course was ten students, six students for the TX-4 course, and the remaining courses all had an average class size of two.
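The historical interarrival times and class sizes imply an expected number of arrivals per year under a fixed-interval schedule. The sketch below is simple arithmetic on the values above. Over a long horizon, arrivals bound completions from above.

```python
# Historical class interarrival times (days) and average class sizes from the text.
COURSES = {
    "IQT": (41, 10),
    "TX-2": (365, 2),
    "TX-3": (219, 2),
    "TX-4": (183, 6),
    "FIUT": (37, 2),
}


def annual_arrivals(interarrival_days, class_size, horizon_days=365.0):
    """Expected arrivals per year with one class every `interarrival_days`."""
    return horizon_days / interarrival_days * class_size


for course, (gap, size) in COURSES.items():
    print(f"{course}: {annual_arrivals(gap, size):.1f} per year")
```

For IQT this gives about 89 arrivals per year. Failures and queueing lengthen time in training but cannot raise long-run completions above this rate.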

Assumptions

Model assumptions are necessary to capture real-world aspects of the 9 ATKS that cannot be recreated exactly in the simulation. The data provide the overall instructor unavailability rate but do not break it down by reason. Therefore, the percentage of unavailable instructors was implemented as a deterministic per-day proportion of the entire instructor pool.

Weather also inhibits the ability to complete training. During the rainy season (July to September) many scheduled flights are either flown in a simulator instead or cancelled. Rather than implement the seasonal effect of weather, the model implements an overall average rate of flight cancellation due to weather. This probability is applied simultaneously to all aircraft.

Maintenance on aircraft and simulators also causes delays in training. Downtime for both is captured with an independent reliability factor built into each equipment resource. Uptime between failures is captured with a uniform distribution with an assumed downtime of one day.

The FIUT course is used to train students to become instructors of the other four courses taught. All of the flights required for the course are reliant on the IQT syllabus flow, as the FIUT students instruct the IQT students as part of their course requirements. This aspect of the FIUT training was not modeled due to the complexity of the real-world training situation. The delays experienced due to this reliance have been built into the FIUT course task table to represent the time delay.

Data Source, Model Inputs and Metrics

All data used for model input was provided by the 9 ATKS and 16th Training Squadron (16 TRS). The assistant director of operations of the 16 TRS oversees the 9 ATKS operations and maintains historical data on the number of students flowing through courses and instructor availability rates. The 16 TRS also provided flight cancellation rates due to weather and maintenance issues. Information on the number of instructors and their skill sets was provided by the 9 ATKS Director of Staff, which guided creation of the resource lists and the number of resources representative of current operations. The instructor selection preferences were provided by the 9 ATKS scheduler, who also provided guidance on the average instructor utilization for additional duties, helping to create a more realistic model. The scheduler also provided the mean number of flights each type of instructor completed weekly and how frequently members must fly recertification flights.

The focus of the study was to provide insight on the number of instructors required to achieve a desired number of students completing training in a specified amount of time. For model validation and operations analysis, aircraft utilization, simulator utilization, and instructor utilization were recorded. In order to provide guidance on where the syllabi could potentially be improved, the time waiting to complete each task was recorded to identify which events in each syllabus were causing delays.

Verification and Validation

Throughout model construction, members of the 9 ATKS most familiar with the squadron functions were consulted to confirm the representations in the model were accurate. Student count was monitored with a visual display during test runs, showing the number of students currently in the course and the number that completed the course. This allowed for verification that classes were starting and ending together and that students were successfully completing the entire syllabus. Also, to establish a baseline, the number of days to complete the syllabus was recorded under perfect conditions, with no weather or maintenance cancellations and all instructors available. The time to complete the syllabus for each class of students directly matched the MQ-9 Initial Qualification and Requalification Training Course syllabus projection.

The model was validated by comparing the length of time to complete each syllabus with the historical results provided. The simulation was run with the same number of students as had historically gone through the different training courses. The simulation completed twenty runs for 730 days. The historical average number of days to complete each syllabus was compared to the average number of days needed for the simulated students to complete each syllabus. A modified two-sample-t confidence interval indicated no significant difference between the simulated mean completion time and the historical completion time.
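A modified two-sample-t interval is commonly taken to mean Welch's unequal-variance interval. The sketch below assumes that reading. It computes the standard error and Welch-Satterthwaite degrees of freedom with the standard library, and the caller supplies the t quantile.

```python
import math
from statistics import mean, variance


def welch_se_df(x, y):
    """Standard error of mean(x) - mean(y) and Welch-Satterthwaite degrees of freedom."""
    vx, vy = variance(x) / len(x), variance(y) / len(y)
    se = math.sqrt(vx + vy)
    df = (vx + vy) ** 2 / (vx ** 2 / (len(x) - 1) + vy ** 2 / (len(y) - 1))
    return se, df


def welch_interval(x, y, t_crit):
    """CI for mean(x) - mean(y); t_crit is the t quantile at the df from welch_se_df."""
    se, _ = welch_se_df(x, y)
    diff = mean(x) - mean(y)
    return diff - t_crit * se, diff + t_crit * se
```

`t_crit` can come from a t table or from `scipy.stats.t.ppf(0.975, df)` for a 95% interval, since Welch's df is generally not an integer. If the interval contains zero, the simulated and historical mean completion times are not significantly different.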

Additionally, the historical number of students completing each syllabus in 2017-2018 was compared to the simulated number of students completing each syllabus. With almost identical throughput in real-world and simulated data across all syllabi, the model was validated as accurately capturing current operations.

Experiments

The 9 ATKS anticipates an increased demand for trained MQ-9 crews and, therefore, larger IQT class sizes. To capture the impact of increased class size on the squadron, experiments were run with ten to 18 students per class. Furthermore, the 9 ATKS was interested in the impact of additional instructors on student flow, leading to an experiment increasing current instructor manning up to 100% of authorized levels, an increase of up to 18 for both pilots and sensor operators. Another alternative investigated was an increase in class frequency. An experiment was run with class interarrival times decreasing from every 50 days to every 40 and 30 days; instructor levels were held constant and class size varied from 10 to 18 students. The results of the experiments were used

Analysis

Baseline Analysis

After validation, model inputs were updated to reflect typical IQT class size, with seven classes being taught annually. Twenty replications of the updated model reflecting normal operations were run for 730 days, with input parameters listed in Table 3. The output data were analyzed to determine resource utilization rates. Overall utilization rates by equipment type indicate that aircraft and simulators are utilized 50.2% and 57.9% of the time, respectively. This indicates that the availability of these resources under normal operations is not a limiting factor on student throughput.

Table 3: Baseline parameter settings.

The average utilization rates of instructors, by skill set, include the 12% of time they are seized for tasks outside of instruction and are noticeably higher than those of aircraft and simulator resources. Instructor pilots qualified as Operations Supervisor (TOP 3), Additional Duty Flight Evaluator/Flight Evaluator (ADFE/FE), Launch and Recovery (LR), and all advanced skills are utilized over 90% of the time. Designated Instructors (DI), who are used most heavily for the FIUT courses, have a utilization rate of nearly 86%. Instructors qualified only for simulators are utilized 77% of the time. Sensor operator utilization rates are lower than pilot utilization rates because sensor operators have fewer additional duties to fulfill. Many of the instructors hold multiple qualifications, and therefore the utilization rates are not mutually exclusive.

The effect of additional instructors on IQT student throughput was analyzed by adding pilots and sensor operators of various skill sets in increments of three. As expected, increases in instructors provided a higher average number of students completing per year and decreased the mean time to complete the course. Paired-t confidence intervals were used to identify significant differences in the average number of students completing the IQT syllabus in a year and in the average completion time. The baseline model was compared against each instructor increase, and each pair of instructor increases was also compared. Significant differences from the baseline are observed when six or more instructors are added, as seen in Table 4. Increases beyond six instructors do not result in a significantly larger number of students completing the syllabus in a year but do reduce syllabus completion time, while additions beyond nine yield no further time improvement. It is reasonable to attribute this tapering of improvement to instructors no longer being the limiting factor in student throughput.
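A paired-t interval works on the per-replication differences between two scenarios. Replication i of one scenario is paired with replication i of the other, which is most effective when common random numbers are used. The sketch below is a minimal stdlib version of that calculation, with the t quantile supplied by the caller.

```python
import math
from statistics import mean, stdev


def paired_t_interval(a, b, t_crit):
    """CI for the mean of a[i] - b[i] over paired replications.

    t_crit is the t quantile with len(a) - 1 degrees of freedom,
    e.g. about 2.093 for a two-sided 95% interval with 20 replications.
    """
    if len(a) != len(b):
        raise ValueError("paired samples must have equal length")
    d = [ai - bi for ai, bi in zip(a, b)]
    half_width = t_crit * stdev(d) / math.sqrt(len(d))
    return mean(d) - half_width, mean(d) + half_width


# Hypothetical annual completions: four replications of two scenarios.
lo, hi = paired_t_interval([10, 12, 11, 13], [8, 9, 9, 10], t_crit=3.182)
```

If the interval excludes zero, as in this made-up example, the instructor increase changes the response significantly relative to the comparison scenario.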

Table 4: Instructor increase comparisons.

Student wait times for each task were also collected. Of the 72 tasks in the IQT syllabus, 33 had a mean wait time exceeding one day, with 19 of these being academic courses requiring the entire class to complete together. Task 39 has the longest mean wait time and follows a series of seven flights, of which two have the highest failure rates in the syllabus. A majority of training delays occur for academic courses requiring the class to complete simultaneously, with academic courses accounting for 55.1% of the waits experienced, while flights in aircraft and simulators comprised 24.2% and 20.7% of the wait time, respectively. Table 5 provides the mean and longest observed wait for tasks exceeding a mean wait of two days.

Table 5: IQT tasks with mean wait time exceeding one day.

Under normal effort levels, the 9 ATKS is expected to produce 120 BCE annually. Annual BCE throughput, by syllabus, in the baseline simulation is listed in Table 6. Under normal operations, with current manning levels and historical class sizes and frequency, the goal of 120 BCE is achievable.

Table 6: Baseline BCE.

The 9 ATKS was also interested in estimating throughput if contractor support was replaced with military manning. Current manning included 15 contractor pilots and 18 contractor sensor operators, of which eight and seven, respectively, were certified to instruct only in the simulators. The simulation was run for 730 days with 20 replications of each scenario using only the non-contractor instructors. Successive scenarios increased the number of pilot and sensor operator instructors in increments of three, up to 24 additional instructors of each type. From this analysis, it was evident that the contractor force has an impact on student throughput. A paired-t confidence interval identified that an additional 12 to 15 of both military pilots and sensor operators would be needed to achieve similar throughput and completion times as currently achieved should the contractor force be removed, as seen in Table 7.

Table 7: Baseline instructor increase comparison without contractors.

Experiments

With interest focused on increasing student throughput for the IQT syllabus, the two responses that best indicate student throughput are the number of students completing the syllabus in a year and the course completion time. Two controllable factors affecting student throughput are the number of instructors and class size. An experimental design was implemented to identify the levels of instructor increase and class size that have the largest impact on student throughput.

The range of class sizes for consideration was based upon historical data and anticipated future class size. The range for instructor manning was based upon the authorized manning levels, and ranged from no increase to an increase of 18 for both pilots and sensor operators. The levels selected for each factor are listed in Table 8. A face-centered central composite design (axial points on the faces of the design region) with an additional center point was used to analyze the different levels of instructor increase and class size.
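A two-factor face-centered central composite design has four factorial corners and four axial points at ±1 on each axis, plus center runs. Two center runs give the ten scenarios reported below. The sketch generates the coded points and decodes them to natural units. The ranges of 10 to 18 students and 0 to 18 added instructors are taken from the text and assumed to match Table 8.

```python
from itertools import product


def face_centered_ccd(n_center=2):
    """Coded points of a two-factor face-centered central composite design."""
    factorial = list(product((-1, 1), repeat=2))
    axial = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # axial points on the faces (alpha = 1)
    return factorial + axial + [(0, 0)] * n_center


def decode(coded, low, high):
    """Map a coded level in [-1, 1] to natural units."""
    return (low + high) / 2 + coded * (high - low) / 2


# Assumed natural ranges: class size 10-18, instructor increase 0-18.
design = [(decode(c1, 10, 18), decode(c2, 0, 18)) for c1, c2 in face_centered_ccd()]
for class_size, added in design:
    print(f"class size {class_size:g}, +{added:g} instructors")
```

With alpha = 1, every design point stays inside the feasible ranges, and each factor needs only three levels. The replicated center point also supplies a model-independent estimate of pure error.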

Table 8: Factor levels for design of experiments.

Ten scenarios were analyzed with a full second-order model using JMP. To satisfy response surface assumptions, a Box-Cox transformation was applied to the response, which adequately addressed model validity concerns. Equations (1) and (2) provide the reduced response surface model in coded space for the annual number of students completing and the average time to complete the IQT syllabus.
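For reference, the general form behind these fits is the Box-Cox transformed response regressed on a full second-order model in the two coded factors. Here x_1 and x_2 denote the coded instructor increase and class size; this symbol assignment is illustrative. The fitted λ and coefficients appear in Equations (1) and (2) and are not reproduced here. Estimating six coefficients from ten runs leaves four residual degrees of freedom before insignificant terms are removed.

```latex
y^{(\lambda)} =
\begin{cases}
\dfrac{y^{\lambda} - 1}{\lambda}, & \lambda \neq 0, \\[4pt]
\ln y, & \lambda = 0,
\end{cases}
\qquad
y^{(\lambda)} = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_{12} x_1 x_2 + \beta_{11} x_1^2 + \beta_{22} x_2^2 + \varepsilon
```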

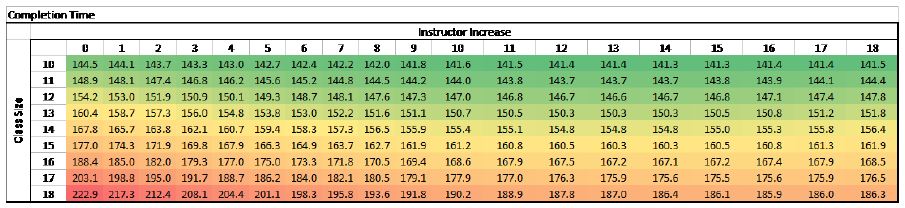

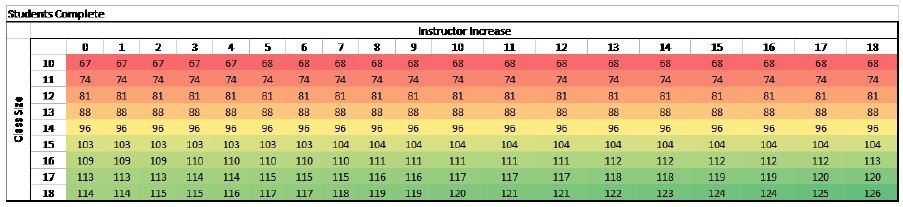

Heat maps were created over a range of factor combinations for each prediction equation. Figure 2 illustrates that the shortest completion times are achieved when class sizes are smaller and more instructors are added. Student throughput is maximized when class sizes are largest, but is not drastically affected by increases in instructors, as depicted in Figure 3.

To further analyze the relationship between class size, instructors, student throughput, and time to complete the IQT syllabus, the OptQuest add-in was utilized. Class size was incremented in steps of two, and the instructor increase in steps of three. Each scenario generated by OptQuest was run at least ten times to produce an average student throughput and completion time. Each objective was optimized individually. Maximizing throughput yielded 125 students annually with an average completion time of 186.7 days, using 18 additional instructors and class sizes of 18. Minimizing completion time yielded 140.6 days, with 68 students completing the IQT course in a year; this was achieved with class sizes of 10 and 12 additional instructors. These results were compared to the baseline model with class sizes of 16 and no added instructors, and both responses differed significantly from the baseline.

Figure 2: Completion time heat map.

Figure 3: Student throughput heat map.

The two objectives were normalized and equally weighted for the multi-objective feature of OptQuest. After all candidate scenarios were run, OptQuest terminated with a class size of 16 and an increase of 15 instructors as the optimal solution. These settings produced an average student throughput of 116 and an average completion time of 167.3 days. At this solution, throughput is ten students below the best throughput of 126, and completion time is 26 days longer than the best completion time of 141.3 days. The solutions found using the response surface prediction equations were similar when the objectives were assessed individually. However, OptQuest only runs scenarios that the model is capable of processing, which limits the solution space to even class sizes and instructor additions in increments of three.
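The equally weighted compromise can be illustrated with a simple scalarization. Each objective is min-max normalized so that 1 is best, and the two are averaged. OptQuest's internal normalization is not described here, so min-max scaling over the three configurations reported in the text is an assumption. The sketch also enumerates the discrete solution space of even class sizes and instructor increases in steps of three.

```python
from itertools import product

# Solution space implied by the text: even class sizes 10-18 and
# instructor increases 0-18 in steps of three.
GRID = list(product(range(10, 19, 2), range(0, 19, 3)))


def min_max(values, maximize):
    """Scale values to [0, 1] so that 1 is best."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) if maximize else (hi - v) / (hi - lo) for v in values]


def equal_weight_scores(throughput, completion_days, w=0.5):
    """Equally weighted sum of normalized throughput (max) and completion time (min)."""
    t = min_max(throughput, maximize=True)
    c = min_max(completion_days, maximize=False)
    return [w * ti + (1 - w) * ci for ti, ci in zip(t, c)]


# The three (class size, added instructors) results reported in the text.
configs = [(18, 18), (10, 12), (16, 15)]
scores = equal_weight_scores([125, 68, 116], [186.7, 140.6, 167.3])
best = configs[max(range(len(configs)), key=scores.__getitem__)]
```

Under this scaling, each single-objective optimum scores 0.5 because it is best on one objective and worst on the other. The (16, 15) compromise scores about 0.63, consistent with the solution OptQuest returned.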

During surge efforts, the 9 ATKS is expected to produce 140 BCE annually. With the other four courses held constant, this requires 125 students to complete the IQT syllabus in the year. As seen in Figure 3, meeting this requirement requires a class size of 18 and an instructor increase of 17 or 18. Under current manning, increasing class size alone yields an average of only 114 students completing annually and increases instructor utilization by 3%. The frequency of classes was therefore investigated. A design of experiments varying class frequency and class size was completed to identify their effects on student throughput. Class size levels remained the same as in Table 8, while course frequency (the interval between class starts) ranged from 30 to 50 days. A face-centered central composite design with two center points was used to analyze the different arrival rates and class sizes.

Second-order models of the effects on student throughput and completion time were fitted and analyzed. Equations (3) and (4) give the reduced response surfaces in coded space for throughput and completion time, respectively.
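For reference, a full second-order model in two coded factors has the general form below, where $x_1$ and $x_2$ stand for course frequency and class size (this labeling is ours) and a reduced model drops the terms found not to be significant. The coded value $x_i$ maps a natural level $\xi_i$ in $[\xi_{i,L}, \xi_{i,H}]$ to $[-1, 1]$. This is the standard form only; the fitted coefficients of Equations (3) and (4) are not reproduced here.

```latex
\hat{y} = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_{12} x_1 x_2
        + \beta_{11} x_1^2 + \beta_{22} x_2^2,
\qquad
x_i = \frac{\xi_i - (\xi_{i,H} + \xi_{i,L})/2}{(\xi_{i,H} - \xi_{i,L})/2}
```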

Student throughput was maximized with classes of 14 starting every 30 days, yielding 126 students annually with an average completion time of 272.1 days. Completion time was minimized with classes of ten arriving every 49 days, resulting in 69 students completing annually with an average completion time of 145 days. The optimal solutions for both objectives produced mean throughput and completion times significantly different from the baseline. The heat maps in Figures 4 and 5 show completion time and throughput across combinations of frequency and class size. More importantly, they show that changes to frequency and class size can meet the 125-student surge requirement without any additional manning.
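Why both frequency and class size matter can be seen from a rough upper bound on annual intake. The sketch below is back-of-the-envelope arithmetic, not part of the simulation; it assumes a 365-day year and evenly spaced class starts.

```python
def annual_intake(interval_days, class_size, days_per_year=365):
    """Students entering IQT per year if a class of class_size starts every interval_days."""
    return days_per_year / interval_days * class_size


max_throughput_intake = annual_intake(30, 14)  # about 170 students per year
min_time_intake = annual_intake(49, 10)        # about 74 students per year
```

For the two optima above, intake is roughly 170 and 74 students per year against reported completions of 126 and 69. The gap would include students still in training at the end of the year and any who do not complete, effects the simulation models and this arithmetic ignores.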

Figure 4: Completion time heat map.

Figure 5: Student throughput heat map.

In simulating the maximal-throughput configuration of 126 students per year (classes of 14 arriving every 30 days), pilot and sensor operator utilization was 94.1% and 77.8%, respectively, increases of 5.5% and 2.9% over baseline operations; in exchange, this configuration produces an average of 18 more MQ-9 crews per year.

Conclusions and Future Research

The model's analysis and results are valuable to decision makers, who may need to adjust multiple factors to meet USAF demand for MQ-9 crews. The simulation made it possible to analyze 9 ATKS operations without disrupting ongoing training. From the scenarios tested in the simulation, multiple regression equations were developed that can be used to predict student throughput and course completion time. Insight into the real-world system was gained by analyzing different aspects of the simulation under settings representative of current manning and operations. Assumptions and simplifications were necessary to represent the real-world system in the discrete event simulation; therefore, the results are reliable but not precise.

Current manning levels are capable of exceeding the desired annual student throughput. However, this requires high utilization of the most qualified pilots. Although students were occasionally delayed by flight events in either the aircraft or the simulator, academics caused the largest delays, largely because students are required to complete academic events as a class.

Analysis determined that class size had more influence on student throughput than the number of instructors. With no change to the number of IQT courses per year, an additional 17 to 18 personnel, across both pilot instructors and sensor operators, would be needed to meet the goal of 125 IQT students completing the course annually. Such an increase, which would bring manning to 100% of authorized levels, could satisfy a demand of 140 BCE. However, this demand can also be met without additional manning by adjusting class sizes and increasing class arrival frequency.

Future efforts would benefit from increasing model fidelity from daily to hourly operations. This would provide a more accurate assessment of instructor utilization and allow instructors to complete more than one flying event per day. It would also allow individual academic courses to be modeled with specific instructors assigned to each lesson. Increased and continual data collection on failure rates per event could improve the accuracy of projected overall student completion. More specific data on instructor availability could allow individualized down times to be modeled. The impact of varying the objective weights could be simulated to provide insight into prioritization. Finally, an arrival distribution could be fitted for use in the model to better capture the variation in class arrivals.

Disclaimer

The views expressed in this article are those of the authors and do not reflect the official policy or position of the United States Air Force, Department of Defense, or the U.S. Government.

Author Biographies

THOMAS TALAFUSE is an assistant professor of Operations Research in the Department of Operational Sciences at the Air Force Institute of Technology and holds a PhD in Industrial Engineering from the University of Arkansas. His research interests include reliability, risk analysis, and applied statistics. His email address is tom.talafuse@gmail.com.

LANCE CHAMPAGNE earned a BS in Biomedical Engineering from Tulane University and an MS and PhD in Operations Research from the Air Force Institute of Technology (AFIT). He is an Assistant Professor of Operations Research in the Department of Operational Sciences at AFIT, with research interests including agent-based simulation, combat modeling, and multivariate analysis techniques. His email address is lance.champagne@afit.edu.

ERIKA GILTS is an active duty operations research analyst in the United States Air Force assigned to Ramstein AFB, Germany. She earned a BA in Math Education from Anderson University and an MS in Operations Research from the Air Force Institute of Technology. Her research interests include modeling and simulation, military operations analysis, text mining, and applied statistics. Her email address is erika.gilts@gmail.com.